Hi Zikka1. Mate, there's a lot in that post of yours. Awesome :-)

zikka1 wrote: Data mining for trading strategies: The presumption is guilty until proven innocent. I get that, and if you're trying to curve-fit/weed out all the drawdowns/risk, it's even worse. What is the fundamental role of data mining tools? Is it to speed up backtesting (and save time finding edges) and avoid coding? My sense is that most people use this software, check the performance over 10 years of data (maybe as few as 20 trades), see beautiful curves with beautiful Monte Carlo simulations on single symbols and think generating strategies is super easy.

I think convenience has a lot to do with the popularity of the method for many, particularly the ability to generate tradeable solutions that do not require coding......but there is a very useful element of the process that tends to be overlooked. It provides a much faster basis for testing hypotheses about the behaviour of a market.

No matter whether we use a data mining method or simply our own discretionary or other systematic method for developing strategies, the results are always curve fit from the get-go. Curve fitting is a necessity for any successful strategy, but the key factor that determines whether an edge is present is whether that curve fit reflects a causal or a non-causal relationship with the market data. We need our strategies to be curve fit to a particular emergent market condition that endures into the future, rather than simply curve fit to past market data with no causative relationship and no future longevity.

The latter, non-causal relationship is what you have got to watch out for. If you simply let data mining do its own thing without a bit of preliminary guidance, you greatly increase the chance of generating a solution that is linked to 'noise' as opposed to an underlying causal pattern that has future endurance.

There are many more possible 'emergent patterns' arising from the impacts of noise than emergent patterns that arise from a causative 'push factor'. Left to its own devices, data mining will simply multiply your problems in seeking a system with an edge. The universe of possibilities generated by data mining is huge given the speed and scope of its capabilities with current technology....but the vast majority of the outcomes generated are unlikely to lead to an enduring edge, even though they will all be deftly 'fitted' to historic market data.
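To see why unguided mining favours noise, here is a minimal Python sketch under a loud assumption: every candidate strategy has zero true edge, with per-trade P&L (in R multiples) drawn from a normal distribution with mean 0. The function `mine_noise` is hypothetical; it picks the best of many candidates on a short in-sample run, then re-checks that same candidate on fresh draws.

```python
import random
import statistics


def mine_noise(n_candidates=1000, n_trades=20, seed=42):
    """Simulate data mining over candidates that have NO real edge.

    Each candidate's per-trade P&L (in R) is drawn from N(0, 1), so any
    apparent edge is pure noise. Returns (best in-sample mean, median
    in-sample mean, out-of-sample mean of the selected candidate).
    """
    rng = random.Random(seed)
    best_is = float("-inf")
    is_means = []
    for _ in range(n_candidates):
        trades = [rng.gauss(0.0, 1.0) for _ in range(n_trades)]
        mean = statistics.mean(trades)
        is_means.append(mean)
        best_is = max(best_is, mean)
    # Fresh draws for the "winner": its true expectancy is still zero.
    oos_trades = [rng.gauss(0.0, 1.0) for _ in range(n_trades)]
    return best_is, statistics.median(is_means), statistics.mean(oos_trades)


best_is, median_is, oos = mine_noise()
print(f"best IS mean: {best_is:.2f}R, median IS mean: {median_is:.2f}R, OOS mean: {oos:.2f}R")
```

With 1,000 candidates of 20 trades each, the winner usually shows an in-sample mean well above zero even though nothing real is there, and its out-of-sample mean has no reason to stay positive. Mining more candidates makes the winner look better, not more real.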

But provided you restrict its capabilities within a predefined logic that you can clearly see will produce a desired outcome if the market exhibits a particular behaviour, you can significantly narrow down the scope of outputs to those that fit within the realm of your hypothesis. This is when data mining becomes an invaluable tool to test that logic: you can check whether the majority of solutions generated have a slight positive expectancy over that market condition, and so test the hypothesis with high confidence.
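One simple way to check whether "the majority of solutions generated have a slight positive expectancy" is a one-sided sign test over the expectancies of the hypothesis-constrained variants. This is a sketch with an assumption worth flagging: the sign test treats variants as independent, and variants mined around one logic are usually correlated, so the p-value will be optimistic. The function name and the example expectancies are hypothetical.

```python
from math import comb


def sign_test(expectancies):
    """One-sided sign test on per-variant expectancies.

    Returns (share_positive, p_value). p_value is the probability of seeing at
    least this many positive variants if each variant were equally likely to
    land above or below zero (no edge). Assumes independence between variants,
    which correlated variants violate, so treat the p-value as optimistic.
    """
    n = len(expectancies)
    k = sum(1 for e in expectancies if e > 0)
    tail = sum(comb(n, i) for i in range(k, n + 1))
    return k / n, tail / 2 ** n


# Hypothetical expectancies (R per trade) for ten variants of one idea.
variants = [0.04, 0.02, 0.06, -0.01, 0.03, 0.05, 0.01, -0.02, 0.02, 0.03]
share, p = sign_test(variants)
print(f"{share:.0%} positive, p = {p:.3f}")
```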

zikka1 wrote: Logic: What if the software generated a strategy that made no intuitive sense but works across, in your example, 20-25 markets and is otherwise robust: large sample of trades, 2 parameters only etc.; should such a strategy be discarded? I see that even QIM say that their collection of strategies explains certain behaviours, which sounds like they are also looking for logic; and Rentech also at least tries to find logic. The answer may be quite simple though, i.e. "strategies that generalize well ACROSS markets and have only 2 parameters/are simple are very easy to understand anyway, so the question is moot". I know a couple of CTAs with a couple of decades of experience each and they wouldn't generally trade a strategy they don't understand; something like High[2] > Low[5] and Low[3] > Low[8] would make them very skeptical.

Given the complexity of markets, IMO it is possible that there are solutions that make no intuitive sense yet actually have an edge.... but there is just no way that I can confidently test whether that is the case. I think the reason the professionals also avoid these solutions is that they like to tie their conclusions to an underlying hypothesis of how they feel markets actually operate. This gives them confidence to stick to their rules when times get tough and also allows them to apply this principle in different contexts. Once you understand the 'causative' factors that give rise to the result, you tend to trust those rules, stick with them and also apply them as general market principles.

For example, if I assume that trends are a phenomenon associated with an 'enduring' participant behaviour of fear and greed, and design a model that simply follows price with a trailing stop and an open-ended profit condition (around which I loosely data mine to provide design variations)...then I can assume that in an uncertain future the 'causative' behaviour will remain in place. This gives me confidence to hold onto these strategies even during tough times. It also gives me confidence that this 'causative factor' can be traded in other contexts (e.g. it is a behaviour common to all liquid markets).
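The kind of model described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the actual design: the breakout entry (close above the prior `lookback`-bar high) and the fixed-points trailing stop are hypothetical choices, since the post only specifies following price with a trailing stop and an open-ended profit condition.

```python
def trend_follow_trades(closes, lookback=20, trail=5.0):
    """Long-only trend-following sketch.

    Entry (hypothetical): the close breaks above the highest close of the
    previous `lookback` bars. Exit: the close falls `trail` points below the
    highest close since entry. There is no profit target, so a winning trade
    runs until the trailing stop is hit. Returns per-trade P&L in points.
    """
    trades = []
    in_position = False
    entry = peak = 0.0
    for t in range(lookback, len(closes)):
        close = closes[t]
        if not in_position:
            if close > max(closes[t - lookback:t]):
                in_position, entry, peak = True, close, close
        else:
            peak = max(peak, close)
            if close <= peak - trail:
                trades.append(close - entry)
                in_position = False
    if in_position:
        # Mark any still-open trade to the final close.
        trades.append(closes[-1] - entry)
    return trades


# Toy series: flat, then a trend up, then a pullback that triggers the stop.
closes = [10] * 5 + list(range(11, 21)) + list(range(19, 13, -1))
print(trend_follow_trades(closes, lookback=5, trail=3))  # [6]
```

Data mining "loosely" around this core would mean varying `lookback` and `trail` while keeping the entry and exit logic fixed.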

It is a bit like learning a new language. If, like a robot, you simply utter a phrase with no connection to its underlying meaning, then it cannot be applied to different contexts, even if by luck the uttered phrase actually makes sense in that context. If, however, you understand the 'causal roots' of that language and how to apply it to different contexts, then meaning can be conveyed. Simply restating a phrase in a different context may lose the causal connection to its intent. So in the data mining context....a solution (a phrase) needs to be connected to a causal factor to be confidently applied in an uncertain future.

I personally feel that understanding the 'causal' factors behind a solution is imperative, as it gives a basis for understanding the whys behind its performance. Why did it fail, or why did it succeed? It gives you a context for your experiment and a firmer grip on the inherent strengths and weaknesses of your system before you step into an unknown future with risk capital at stake.

zikka1 wrote: Single market "edge": what if the strategy only works on 1 market or only across a series of CORRELATED markets, e.g. an overnight premium strategy that only works on US index futures? That would certainly not be multi-market. Couldn't there be other effects that are specific to a particular market? Won't an insistence on a strategy generalizing across markets lead to many missed discoveries? Perhaps the answer is that the missed discoveries are a price worth paying, as the insistence will generally also weed out a lot of false discoveries, which are more dangerous. Alternatively, one could say that if a strategy doesn't generalize well, one takes higher "overfitting risks" with it and, if cognisant of that, should perhaps allocate a much smaller % of capital to strategies that do not generalize well.

Totally agree mate. There are certain strategies that respond to a particular market condition that occurs from time to time and can be attributed to a type of resonance that is market specific. These tend to be 'convergent' in nature, for example when markets are directionless and exhibit a rhythmic oscillation around a fairly stationary equilibrium point. These oscillations are generally unique to a particular market and associated with a particular mix of participant behaviour, so they are unlikely to be successfully applied across multiple markets...... unless of course there is some very strong causal link between other markets and this phenomenon. With central bank intervention across asset classes (buying the dips and selling the tips), we have seen this feature spread across asset classes...but it is unlikely to be an enduring feature that can be relied on.
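A common way to express this kind of 'convergent' behaviour in code is to fade stretches away from a rolling equilibrium. Below is a minimal Python sketch, assuming the rolling mean of recent closes is a fair proxy for the equilibrium point and using a hypothetical 2-standard-deviation trigger. It is one illustration of a convergent rule, not a recommended design.

```python
import statistics


def zscore_signal(closes, window=20, entry_z=2.0):
    """Convergent (mean-reversion) sketch for the latest bar.

    Returns -1 (sell) when the close is stretched `entry_z` standard
    deviations above the rolling mean, +1 (buy) when stretched below it,
    and 0 otherwise or when there is not enough history.
    """
    if len(closes) < window:
        return 0
    recent = closes[-window:]
    mean = statistics.mean(recent)
    sd = statistics.pstdev(recent)
    if sd == 0:
        return 0
    z = (closes[-1] - mean) / sd
    if z >= entry_z:
        return -1
    if z <= -entry_z:
        return 1
    return 0


print(zscore_signal([100] * 19 + [110]))  # stretched high, so fade it: -1
```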

I personally prefer hunting for more permanent market features that are market agnostic, as opposed to less enduring patterns that tend to be market specific. IMO there are more wealth gains to be had in mining for divergence than convergence. It is a far less competitive space, as you really need patience to play it and confidence in your system....but that is why I feel the edge is so enduring with divergent techniques and why it still hasn't been arbitraged away.

That is just my preference though mate....and it should not dissuade people from data mining for either divergent or convergent solutions. Both are valid IMO...however they sit at opposite ends of the spectrum and as a result require different workflow processes to mine for them. It is not a one-size-fits-all process. The process you use needs to tie to the logic of what you are trying to achieve.

zikka1 wrote: No need for rigour, focus on survival: Generate 100s/1000s of strategies quickly through data mining, with no need to worry about logic or multi-market validation (only slippage/costs, i.e. feasibility), then run them on "demo" over 6 months (or 3 years if you need more proof!) and select the ones that survived. Lots of hours saved and lots of "false negatives" avoided. Some people swear by this approach over the more "rigorous" approach; their view is that if it works (survival of the fittest) in the live market, that is proof enough and the ultimate test. Any thoughts?

Not sure mate. When in doubt I always refer to the long-term track records of the best FMs in the world. This particular approach is yet to feature in those track records. It might still be a long time coming...or never come at all.

While I like the idea of 'survival of the fittest', it isn't a process I would deem worthy as a rigorous empirical method. There is almost an Artificial Intelligence (AI) flavour to the rationale, where you pass the heavy lifting over to the machines, but I have yet to see significant success in this arena. One of the big reasons it doesn't fly with me is that to actually demonstrate an edge....you need a really big trade sample size. A 3-5 year track record could just as easily have been a 'lucky result'.
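The 'lucky result' problem can be quantified with a quick Python sketch. The assumptions are hypothetical and deliberately simple: every strategy has zero edge (each trade wins or loses 1R with probability 0.5), and a 6-month demo yields about 60 trades. The question is how many such strategies would still 'survive' by finishing in profit.

```python
import random
from math import comb


def survival_probability(n_trades):
    """Exact chance that a zero-edge +/-1R coin-flip strategy ends in profit."""
    wins_needed = n_trades // 2 + 1
    ways = sum(comb(n_trades, k) for k in range(wins_needed, n_trades + 1))
    return ways / 2 ** n_trades


def lucky_survivors(n_strategies=1000, n_trades=60, seed=7):
    """Monte Carlo count of zero-edge strategies that finish a demo in profit."""
    rng = random.Random(seed)
    survivors = 0
    for _ in range(n_strategies):
        pnl = sum(1 if rng.random() < 0.5 else -1 for _ in range(n_trades))
        if pnl > 0:
            survivors += 1
    return survivors


print(f"exact survival rate: {survival_probability(60):.1%}")
print(f"simulated survivors out of 1000: {lucky_survivors()}")
```

Under these assumptions roughly 45% of edgeless strategies pass a profit-only demo filter, which is why survival on its own says very little about an edge.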

zikka1 wrote: Testing across markets: If one is testing across markets to validate a strategy, would it be better to a) test on one market (e.g. corn) and then validate on the other ten futures markets (which would then be the out of sample), b) test on all of them (all would be in sample, but considering each market is so different, if it works on hundreds of years and trades of combined data, even if all IS, does one need an out of sample?) or c) test on all the markets in sample but split the data for each market so that each market has a portion of data, say 30%, reserved as out of sample at the end of the data period (i.e. the last 30% of each symbol's data would be OOS), so that the in sample would be 70% of the data across the 10 symbols and the out of sample would be 30% of the data across the 10 symbols?

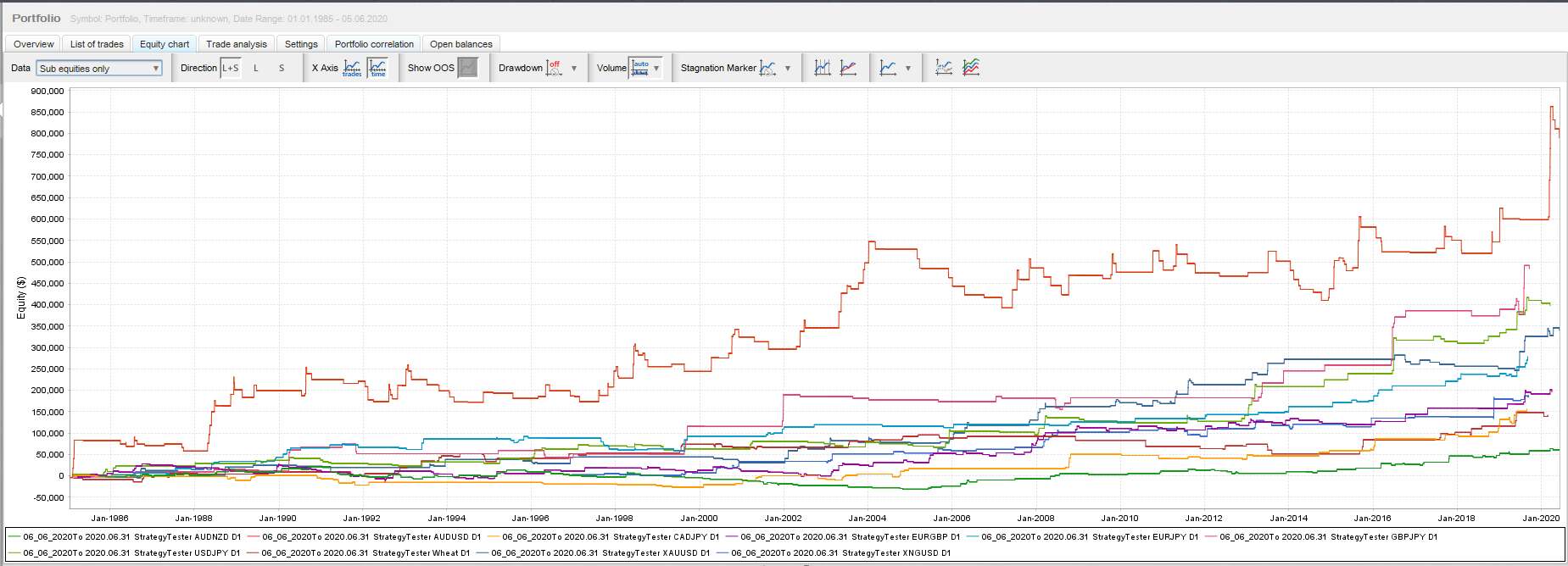

Yes mate. You have nailed it IMO. I actually separate my universe into 3 groups: Commodities, Equity Indices and Forex. I then undertake multi-market tests within each of these categories. These broad groupings are constructed differently and exhibit different global behaviours, so I would not recommend a one-size-fits-all approach here. Equity Indices have a long bias built into their construction and need to be treated differently in the data mining process. There is more commonality between Forex and Commodities, but I still prefer to split them up to play it safe.
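Option (c), run separately within each of the three groups, could look like the following Python sketch. The symbols, the class mapping and the 70/30 split are hypothetical placeholders taken from the question's example, and bars are assumed to be stored in time order; how much data (if any) to hold back is itself a judgement call.

```python
from collections import defaultdict


def split_by_class(bars_by_symbol, asset_class, in_sample_pct=70):
    """Group symbols by asset class and split each symbol chronologically.

    For every symbol, the first `in_sample_pct` percent of its bars (assumed
    to be in time order) is in-sample and the remainder is out-of-sample, so
    the OOS slice always sits at the end of each market's history.
    """
    pools = defaultdict(lambda: {"is": {}, "oos": {}})
    for symbol, bars in bars_by_symbol.items():
        cut = len(bars) * in_sample_pct // 100  # integer maths avoids float rounding
        group = pools[asset_class[symbol]]
        group["is"][symbol] = bars[:cut]
        group["oos"][symbol] = bars[cut:]
    return dict(pools)


# Hypothetical symbols and placeholder bar data.
bars = {"CORN": list(range(10)), "EURUSD": list(range(20)), "SPX": list(range(50))}
classes = {"CORN": "Commodities", "EURUSD": "Forex", "SPX": "Equity Indices"}
pools = split_by_class(bars, classes)
print(sorted(pools))
```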

Regarding OOS, this IMO depends on the confidence you have in the method. When I started the trend following workflow process without multi-market testing, I would reserve about 50% for OOS. Over time, and with the introduction of multi-market testing, I became more and more confident that the 'core design' itself was preventing unfavourable curve fitting results...so I then tested using all In Sample data with no OOS, but with multi-market. I then compared and contrasted the impact using Map to Market and found that the wider range of market conditions used to build the strategies (In Sample) achieved the outcomes I was looking for. It became more important to me to have a larger In Sample than to reserve an amount of market data for OOS to test the validity of the strategy on unseen data.

When you get to a trade sample of, say, 10,000 trades using multi-market testing, it becomes clear whether you have an enduring edge or not....Provided your trade sample size is large enough, the need for OOS to test the validity of the strategy on unseen data loses its significance.
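A back-of-the-envelope Python sketch shows why sample size matters so much. Assume (hypothetically) an edge of 0.05R per trade with a standard deviation of 1R per trade, and independent trades. The t-statistic of the mean trade is mean / (sd / sqrt(n)), so it grows with the square root of the number of trades.

```python
import math


def t_stat(mean_r, sd_r, n_trades):
    """t-statistic of the average trade: mean / standard error of the mean."""
    return mean_r / (sd_r / math.sqrt(n_trades))


for n in (200, 1_000, 10_000):
    print(f"{n:>6} trades: t = {t_stat(0.05, 1.0, n):.2f}")
```

The same hypothetical edge is statistically invisible at 200 trades (t of about 0.7) but stands out clearly at 10,000 (t = 5). Reaching t of about 2 would need roughly (2 / 0.05)² = 1,600 trades.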

So in a nutshell, I have more confidence in an edge demonstrated by a 10,000 simulated trade backtest than in an edge we might presume exists from a 200 trade live or demo out of sample phase. That OOS test suffers from the same lack of sample size that creates the problem in the first place. The emphasis therefore needs to be placed on ensuring that backtests correctly simulate the live trading environment, and on factoring in conservative cost assumptions such as slippage, varying swap and spread, so that the simulation is validated and conservative in nature.
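Conservative cost assumptions can be baked into a simulation by deducting them from every trade and then inflating them. A minimal Python sketch, assuming costs are expressed in price points and account currency, slippage is paid on both entry and exit, and a hypothetical `cost_multiplier` of 1.5 pads all costs by 50%. The swap rate is treated as a charge per night held, and any swap credit is ignored to stay on the pessimistic side.

```python
def net_trade_pnl(gross_points, point_value, spread_points, slippage_points,
                  swap_per_night, nights, cost_multiplier=1.5):
    """Net P&L of one round-trip trade in account currency.

    spread_points   : spread paid once per round trip (price points)
    slippage_points : slippage assumed on entry AND on exit (price points)
    swap_per_night  : overnight financing charge in account currency; a
                      positive number is a cost, and a credit (negative
                      number) is ignored to stay conservative
    cost_multiplier : > 1 inflates all costs to keep the backtest pessimistic
    """
    trading_cost = (spread_points + 2 * slippage_points) * point_value
    holding_cost = max(swap_per_night, 0.0) * nights
    return gross_points * point_value - cost_multiplier * (trading_cost + holding_cost)


print(net_trade_pnl(gross_points=10, point_value=1.0, spread_points=1.0,
                    slippage_points=0.5, swap_per_night=0.2, nights=5))
```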

I don't place much validity in a method that rotates, say monthly, in and out of EAs that succeed or fail over that very short time interval based on short-term data mining methods....but I would place more emphasis on a method that rotates between algorithms to 'sharpen the edge' regularly, provided those same EAs can also demonstrate a very long-term track record (say 20 to 30 years) under simulation. I admit that markets do adapt, so you need some method to keep that edge sharp over time....even with trend following.

The parameters of the strategy need to stay but the values of those parameters need to be dynamic over time to reflect adaptive market conditions.
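Keeping the parameters fixed while letting their values adapt is commonly done with walk-forward re-optimisation. A minimal Python sketch, assuming a caller-supplied `evaluate(value, segment)` scoring function and hypothetical window lengths: on each rolling training window the best candidate value is chosen, then applied to the next, unseen test window. In keeping with the post, the candidate values would ideally come from designs that already hold up over a very long simulated history.

```python
def walk_forward(data, candidates, evaluate, train=250, test=50):
    """Rolling walk-forward selection of one parameter's value.

    evaluate(value, segment) -> score (higher is better). Returns a list of
    (test_start_index, chosen_value, out_of_sample_score) tuples.
    """
    schedule = []
    start = 0
    while start + train + test <= len(data):
        train_seg = data[start:start + train]
        best = max(candidates, key=lambda v: evaluate(v, train_seg))
        test_seg = data[start + train:start + train + test]
        schedule.append((start + train, best, evaluate(best, test_seg)))
        start += test
    return schedule


# Toy example: the "best" value is the one closest to the segment average,
# and the data shifts regime halfway through.
def closeness(value, segment):
    return -abs(value - sum(segment) / len(segment))


data = [1] * 300 + [5] * 300
print([value for _, value, _ in walk_forward(data, [1, 5], closeness, train=100, test=100)])
```

The chosen value lags the regime change by one window, which is the price of adapting only on data that has already been seen.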

zikka1 wrote: Realistic returns: 20% CAGR over a sustained period of time is considered world class for a hedge fund. The CTA performance graph you often post shows the average is closer to 8%. However, I often hear traders say "yes, that's because they manage billions of dollars, you can do much better as a retail trader". Is there any basis to this statement, or is it another one of those myths with no evidence? Sure, I'd be super happy generating 100% each year on my $100k and reaching my million super fast (not greedy for the hundreds of millions), if this were true!

I hear you mate. When I hear this bold rhetoric I tend to smile and quickly dispatch the discussion into the 'graveyard of trading myths'. It is not that people are necessarily 'lying', but rather that they simply do not know how to distinguish between random luck and an edge. I think an appreciation of the randomness that exists within complex systems only really comes from a pretty deep understanding of how complex systems work.

Also, I think people might confuse annual returns with CAGR. An 8% CAGR over a 20 year plus track record allows for some great years (60% plus), but these need to be put in the context of the bad or average years where you may get a 4% loss or a 2% gain. Once again this is a symptom of volatile equity curves, and particularly of the impact of compounding (leverage) on that return stream over the long term. Most traders never experience the windfall of compounding as they do not survive long enough to experience it.
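The gap between headline years and CAGR is easy to show with a little arithmetic. A minimal Python sketch with hypothetical return sequences: CAGR is the geometric average, (product of (1 + r)) ^ (1 / years) - 1, which is always at or below the arithmetic average of the yearly returns and falls further below it as returns become more volatile.

```python
def cagr(annual_returns):
    """Compound annual growth rate from yearly returns (0.60 means +60%)."""
    growth = 1.0
    for r in annual_returns:
        growth *= 1.0 + r
    return growth ** (1.0 / len(annual_returns)) - 1.0


def arithmetic_mean(annual_returns):
    """Simple average of the yearly returns."""
    return sum(annual_returns) / len(annual_returns)


# Hypothetical decade: one standout year, the rest modest or slightly negative.
years = [0.60, -0.04, 0.02, 0.05, -0.04, 0.02, 0.10, -0.04, 0.02, 0.03]
print(f"arithmetic mean: {arithmetic_mean(years):.1%}, CAGR: {cagr(years):.1%}")

# Volatility drag: +50% then -50% averages 0% but compounds to a loss.
print(f"{cagr([0.5, -0.5]):.1%}")
```

Even with a +60% year in the mix, this hypothetical decade compounds at only about 6% a year.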

All we need to validate this claim is proof of the pudding over, say, a 20 year trading history or 10,000 live trades....then I would be prepared to at least consider what is being said.....the ultimate arbiter of truth is a validated track record with a very large trade sample size.

zikka1 wrote:FX: Professional manager friends have advised me to stay away from spot FX as a retail trader and focus on futures. Any thoughts on this?

They are right to a degree, but they forget that the finite capital available to us retail traders is a really big obstacle to achieving sufficient diversification. With a small capital base you simply cannot obtain the degree of diversification in Futures that you can with Forex and CFDs through a retail broker.

The microlot offering in Forex and CFDs is a great thing that you don't have in Futures; it allows you to diversify heavily....but there is a greater trading cost to this convenience, which puts some extra challenges on the retail trader.
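The diversification point comes down to position-size granularity. A minimal Python sketch with hypothetical numbers: a $10,000 account risking 1% ($100) per position. For a USD-quoted pair, one microlot (1,000 units) moves about $0.10 per pip, so a 100-pip stop risks about $10. The $1,250 risk per futures contract is purely illustrative.

```python
import math


def max_units(capital, risk_fraction, risk_per_min_unit):
    """How many minimum tradable units (one futures contract, one microlot,
    etc.) fit inside the per-position risk budget capital * risk_fraction."""
    return math.floor(capital * risk_fraction / risk_per_min_unit)


capital = 10_000
print("futures contracts:", max_units(capital, 0.01, 1_250))  # hypothetical $1,250 risk per contract
print("FX microlots:", max_units(capital, 0.01, 10))          # 100-pip stop x $0.10 per pip
```

With a zero result the futures position simply cannot be taken at that risk level, while the microlot version can be sized, and spread across many markets, within the same 1% budget, at the cost of the higher trading costs mentioned above.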

I personally prefer the Forex and CFD space to play this game.

Cheers Z. Thanks for the discussion. :-)