Topic: Methodologies - any empirical tests?

So there is a lot of discussion about how best to trade the generated EAs, but so far I haven't seen any specific comparisons. I'm thinking of three approaches: validating on OOS data, running on a demo account before going live, or going straight to live.

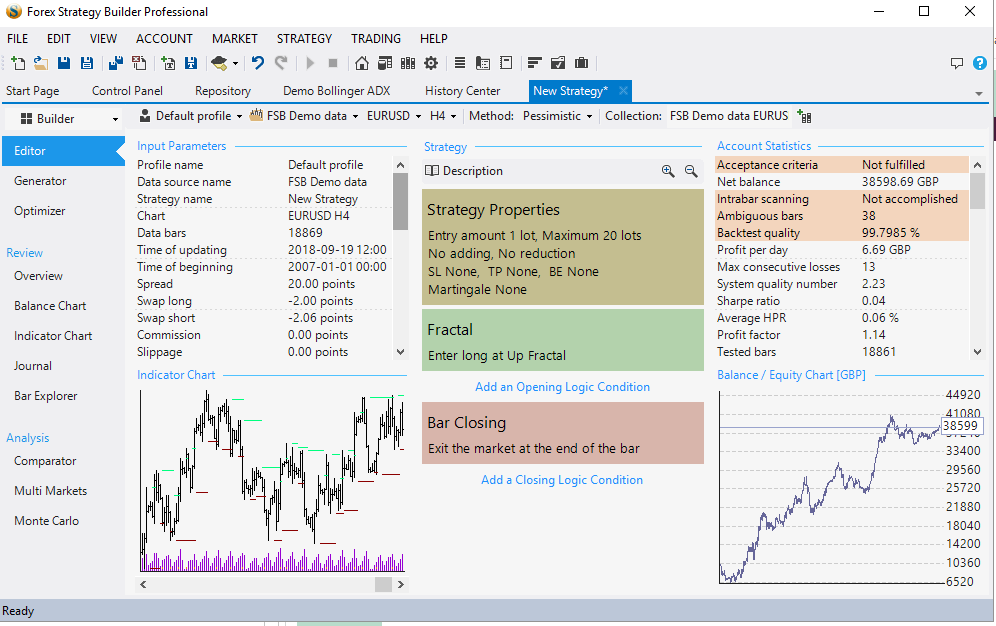

Has anyone tested each method to see which is best, or is it all just opinion? From what I can see, generating and then going to a demo account is fairly pointless. It's no different from using OOS, except that you spend real time (weeks or months) instead of the moments OOS takes in FSB. That leaves OOS or straight to live, and going directly to live with a generated EA seems a bit of a risk to me.

From the testing I have done in FSB with 20% OOS, it's pretty clear that over 80% of the generated strategies are simply artifacts of pure coincidence. You don't even need a marker showing where the OOS data starts when viewing a strategy: it's obvious, because the equity line drops off a cliff at that point. But OOS isn't perfect either, and FSB's implementation is basic to say the least. I haven't tried it yet, but from looking over the docs, Strategy Quant's implementation of OOS is much more geared towards progressive walk-forward analysis, using the OOS as a tool that can give at least some indication of robustness.
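For anyone who hasn't used both tools, the difference between a single OOS split and walk-forward analysis can be sketched in a few lines of Python. This is a generic illustration under my own assumptions, not FSB's or Strategy Quant's actual code; the names `split_is_oos` and `walk_forward_windows` are hypothetical, and all lengths are counted in bars.

```python
from typing import Iterator, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_is_oos(bars: Sequence[T], oos_fraction: float = 0.2) -> Tuple[Sequence[T], Sequence[T]]:
    """Single chronological split: earliest bars in-sample, last `oos_fraction` out-of-sample."""
    if not 0.0 < oos_fraction < 1.0:
        raise ValueError("oos_fraction must be strictly between 0 and 1")
    # Round the OOS bar count so float noise in the fraction can't shift the cut by one bar.
    cut = len(bars) - round(len(bars) * oos_fraction)
    return bars[:cut], bars[cut:]


def walk_forward_windows(n_bars: int, is_len: int, oos_len: int) -> Iterator[Tuple[range, range]]:
    """Rolling walk-forward: fit on `is_len` bars, test on the next `oos_len`, then roll forward by `oos_len`."""
    start = 0
    while start + is_len + oos_len <= n_bars:
        yield range(start, start + is_len), range(start + is_len, start + is_len + oos_len)
        start += oos_len
```

With 100 bars, `split_is_oos(bars, 0.2)` gives 80 in-sample and 20 out-of-sample bars, i.e. one unseen period. `walk_forward_windows(100, 40, 20)` gives three in-sample/OOS pairs whose OOS segments cover bars 40 to 99 back to back, so a strategy is judged on several unseen periods rather than one.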

As others have pointed out in other threads, using OOS (in FSB at least) can be as much pot luck as not using it: you may simply have been lucky enough to pick a period in which the market conditions behind that generation still held. They may not hold now. It's got to be worth some testing, though.
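One way to see why passing OOS can be pot luck is to simulate strategies with no edge at all and count how many still look good. This is a simplified sketch under my own assumptions: each "strategy" is just a list of independent normally distributed trade results with mean zero, and "passing" means a positive total profit, which is much cruder than a real generator's filters. The function name `noise_pass_rates` is hypothetical.

```python
import random
from typing import Tuple


def noise_pass_rates(n_strategies: int = 2000, n_trades: int = 100,
                     oos_fraction: float = 0.2, seed: int = 1) -> Tuple[float, float]:
    """Return (share passing in-sample, share passing both IS and OOS) for zero-edge strategies."""
    rng = random.Random(seed)
    n_oos = round(n_trades * oos_fraction)
    passed_is = passed_both = 0
    for _ in range(n_strategies):
        # Every trade is pure noise: mean 0, so there is no edge to find.
        trades = [rng.gauss(0.0, 1.0) for _ in range(n_trades)]
        is_profit = sum(trades[: n_trades - n_oos])
        oos_profit = sum(trades[n_trades - n_oos:])
        if is_profit > 0:
            passed_is += 1
            if oos_profit > 0:
                passed_both += 1
    return passed_is / n_strategies, passed_both / n_strategies
```

Because the in-sample and OOS results are independent noise here, roughly half the strategies pass the in-sample filter and roughly a quarter of all of them pass both, even though none has any edge. A single OOS pass is weak evidence on its own.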

I was thinking of two demo accounts for a test, using short-timeframe charts (perhaps M5) so we get a decent trade count. The basic plan is to take a 6-week data horizon and generate the best 4 strategies on a given pair: one set of 4 using 20% or 25% OOS, the other using all the data in the generation. The best 4 would be the ones whose equity line is still climbing at a steep angle at the very end of the 6-week period. Run them for one week, then at the weekend bin them and generate 4 new strategies of each kind as before. Repeat for a month. It's just an idea based on comments in other threads about people's preferred workflows, so it covers most angles.
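The test loop above can be sketched as follows, assuming the generation itself still happens in FSB and only the selection and scheduling are scripted. The "steep upwards angle at the very end" criterion is approximated by a least-squares slope over the last `lookback` equity points; that estimator, the names `end_slope`, `pick_top` and `weekly_schedule`, and the Monday start date are all my own assumptions for illustration.

```python
from datetime import date, timedelta
from typing import Dict, List, Sequence, Tuple


def end_slope(equity: Sequence[float], lookback: int = 50) -> float:
    """Least-squares slope (profit per bar) of the last `lookback` equity points."""
    ys = list(equity[-lookback:])
    n = len(ys)
    if n < 2:
        return 0.0
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def pick_top(candidates: Dict[str, Sequence[float]], n: int = 4, lookback: int = 50) -> List[str]:
    """Hypothetical selection rule: keep the n strategies whose equity line is steepest at the end."""
    ranked = sorted(candidates, key=lambda name: end_slope(candidates[name], lookback), reverse=True)
    return ranked[:n]


def weekly_schedule(first_live_monday: date, weeks: int = 4,
                    horizon_weeks: int = 6) -> List[Tuple[date, date, date, date]]:
    """(data_start, data_end, live_start, live_end) for each weekly regeneration; ends are exclusive."""
    rows = []
    for k in range(weeks):
        live_start = first_live_monday + timedelta(weeks=k)
        data_end = live_start  # generate on data up to the start of the live week
        data_start = data_end - timedelta(weeks=horizon_weeks)
        live_end = live_start + timedelta(weeks=1)
        rows.append((data_start, data_end, live_start, live_end))
    return rows
```

For example, `weekly_schedule(date(2024, 1, 8))` lists four rebuilds; the first generates on data from 27 Nov 2023 up to (not including) 8 Jan 2024 and trades the week of 8 Jan. The same schedule would run twice, once for the OOS set and once for the full-data set, with `pick_top` applied to each set's candidates.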

It would be interesting to see which approach worked best (if either makes a profit at all). Unfortunately, a 15-day trial isn't nearly long enough to get any useful data, so I can't do this myself. Surely someone must have done some actual testing to show that the methodology they use has some robustness.
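To put a rough number on "not enough data", a standard t-statistic on per-trade results helps. This sketch assumes trade results are roughly independent with a stable distribution, which live trading only approximates. `trades_needed` uses the normal approximation n ≈ (z·σ/μ)², where μ/σ is the average edge per trade in units of the trade standard deviation. The function names are hypothetical.

```python
import math
from statistics import mean, stdev, variance
from typing import Sequence


def t_stat(trades: Sequence[float]) -> float:
    """t-statistic of the mean trade result against zero (needs at least 2 trades)."""
    return mean(trades) / (stdev(trades) / math.sqrt(len(trades)))


def welch_t(a: Sequence[float], b: Sequence[float]) -> float:
    """Welch's t-statistic for the difference in mean trade result between two accounts."""
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / se


def trades_needed(edge_over_sd: float, z: float = 1.96) -> int:
    """Normal-approximation trade count for the mean to sit z standard errors above zero."""
    return math.ceil((z / edge_over_sd) ** 2)
```

For instance, if a strategy's average trade is 0.1 standard deviations, `trades_needed(0.1)` returns 385, i.e. several hundred trades just to separate that edge from zero at roughly 95% confidence. `welch_t` would compare the per-trade results of the OOS account against the full-data account in the same way.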